Initial User Research to counteract misinformation on social networks

Introduction

The Zappa project is funded by a grant from the Culture of Solidarity fund to support cross-border cultural initiatives of solidarity in times of uncertainty and "infodemic". The aim of this initial user research was to help guide development of a custom Bonfire extension to empower communities with a dedicated tool to deal with online misinformation.

Methodology

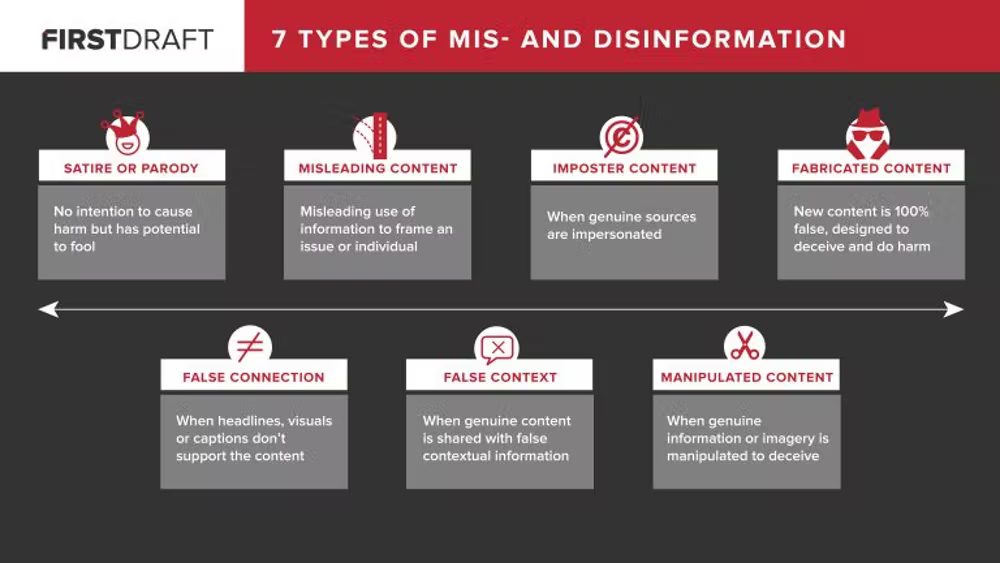

For the purposes of this research, we used a simple definition of misinformation as information which is either incorrect or misleading but which is presented (or re-shared) as fact. Disinformation is on the same spectrum as misinformation, but is shared with the intention to deceive.

We found the spectrum in the graphic below, from First Draft, useful in helping to frame the discussion with our user research participants.

Our user research participants were volunteers who we recruited through our networks - either directly through an existing relationship, a referral from a contact, or a response to a general request sent out via the Fediverse.

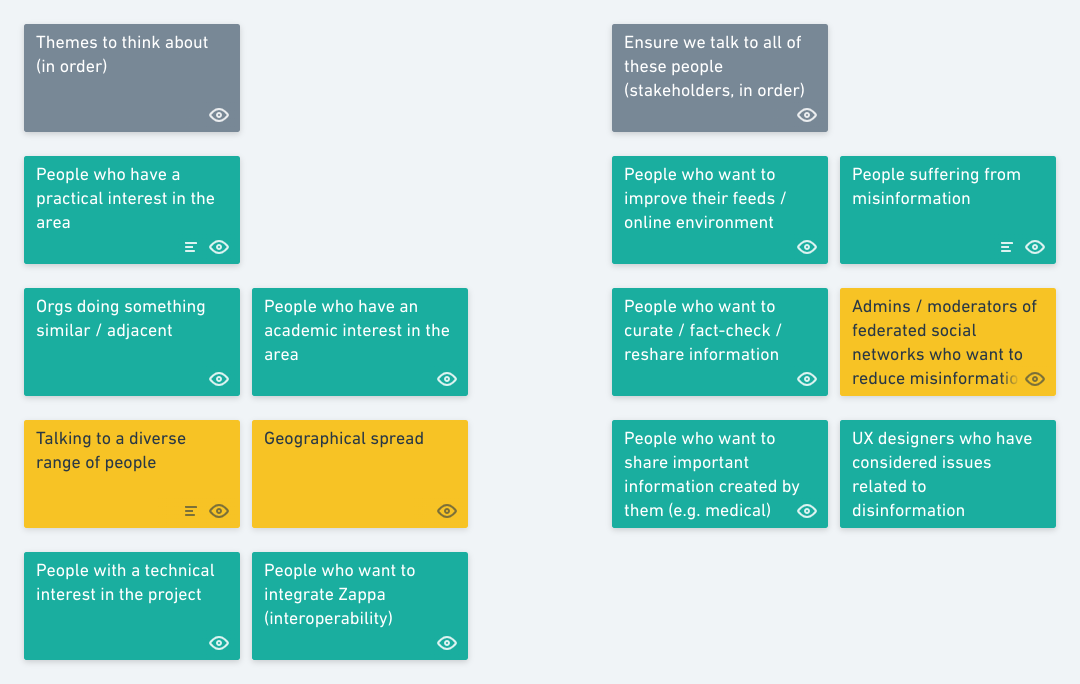

We outlined the kinds of people we wanted to speak with through some initial brainstorming, which we refined after talking to our first couple of user research participants. The board below shows who we wanted to talk with and the themes we wanted to cover.

Over the four-week user research period we conducted 10 user research interviews via video conference and, in addition, had one participant who preferred to answer our questions asynchronously via email.

As the screenshot of our board above demonstrates, we were aiming to talk to a wide range of stakeholders and cover a number of themes. The boxes are prioritised from top to bottom under each grey box, with for example “people who have a practical interest in the area” being given greater priority than “people with a technical interest in the project”.

The green boxes indicate those groups or themes we believe that we covered during the user research process, with those in yellow being those we do not believe we fully covered.

Findings

Our research opened our eyes to important work being done by individuals and organisations, primarily in Europe and North America. Two user research participants also discussed work being done in South America, and one mentioned a project with an organisation based in Asia.

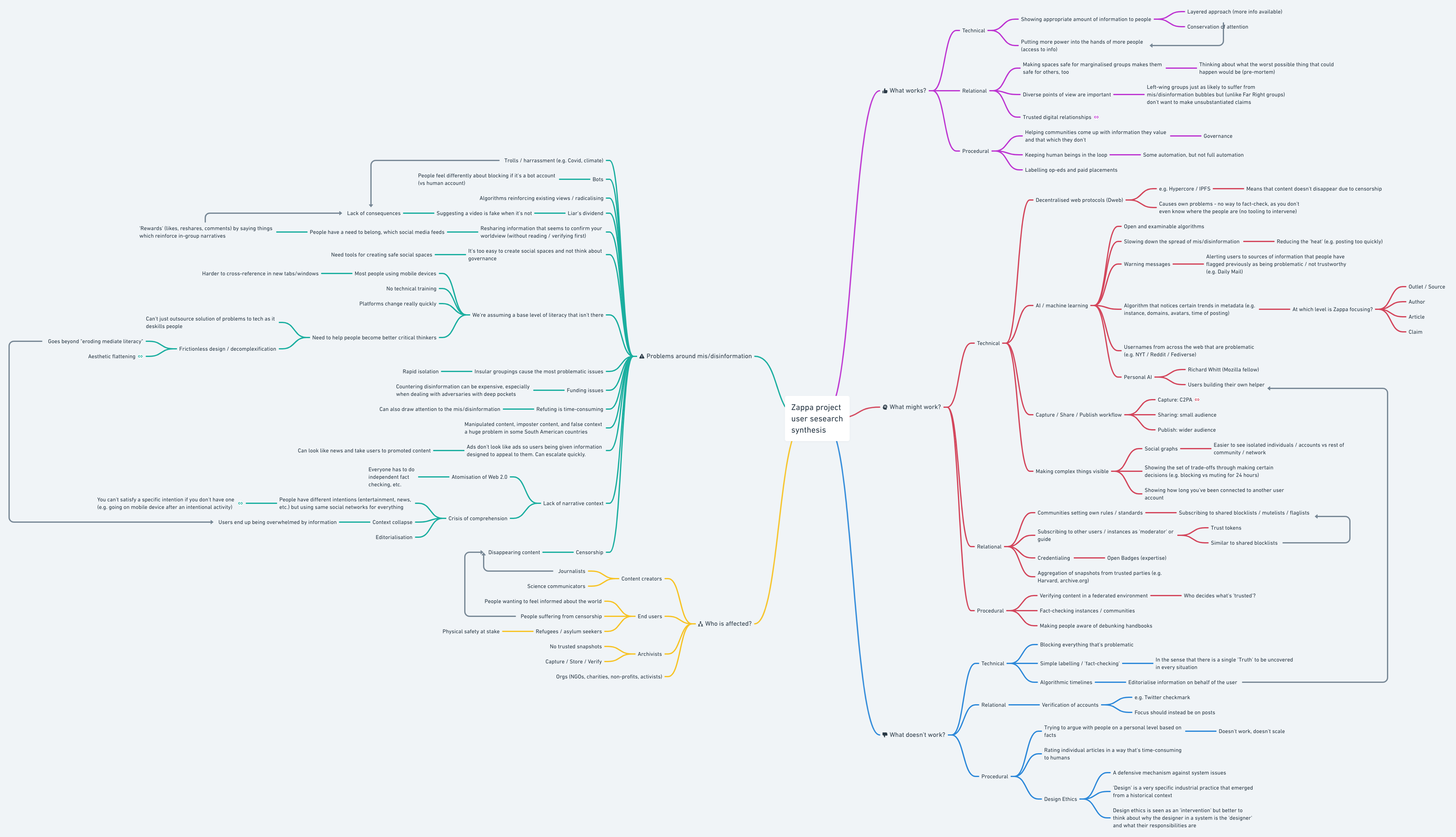

The mindmap below shows some of the most relevant findings from our research, detailing problems around misinformation, who is affected by it, as well as what works, what might work, and what doesn’t work. We were particularly grateful for one user research participant suggesting a categorisation based on technical, relational, and procedural solutions, which they are using in their work.

Broadly speaking, our main findings were that:

- Blocking - there is a broad assumption among users that technical solutions such as blocking problematic accounts are effective. However, those who have thought about this more deeply recognise that this approach misses the bigger picture, deals with symptoms rather than causes, and simply pushes the problem elsewhere. That being said, blocking and/or muting individual user accounts and instances is still an important tool in dealing with misinformation. Having tools which go beyond a simple binary block/unblock would help users manage this process.

- Verification - the advent of verified accounts is problematic when it comes to misinformation as even verified accounts can publish misinformation. As a result, verification should happen on the level of individual posts rather than accounts.

- Labelling - rating systems and fact-checking can be extremely time-consuming. They can also lead to unintended consequences such as drawing attention to the misinformation. A more promising approach is to label persistent sources of misinformation so that users are warned and can make up their own mind.

- Algorithms - there are many ways to apply AI, machine learning, and algorithms to social networks. Centralised, proprietary, for-profit social networks such as Twitter and Instagram use closed algorithms to curate information on behalf of users. These can amplify problematic content for the sake of engagement (and related advertising dollars). Instead, a “personal AI” or algorithm which is open to be changed by users and to help them could be a promising idea to pursue.

- Moderation - there will always be a need for moderators within social networks, to enforce community standards, to resolve disputes, and to deal with misinformation. The visibility of moderation is a balance between burdening users with additional information and keeping them informed about decisions being made on their behalf. Layering information so that users can go deeper should they wish is a potential avenue to explore through UX design.

In addition, we discovered that:

- Bots - people feel differently (less hesitant) about blocking non-human user accounts, for example if they are ‘sock puppet’ accounts from bad actors.

- Consequences - poor behaviour online is often tied to a lack of consequences for a user’s actions, for example in sharing misinformation or trolling.

- Governance - creating social spaces is much easier than thinking about the governance it takes to make them safe spaces and/or ensure their sustainability.

- Literacy - not only has media literacy been eroded through ‘frictionless design’ and an intention not to make users think, but ‘context collapse’ also overwhelms users who have neither the conceptual tools nor technical skills to cope.

- Censorship - this is not only a problem of not giving particular users access to networks or resources, but also of causing resources to disappear. This has a knock-on effect of some users (e.g. journalists) being distrusted when the information on which they rely no longer exists.

Recommendations

Based on this initial user research, we recommend these initial actions:

A) Use a multi-pronged approach

As one user research participant mentioned explicitly, and several alluded to, there is no way to ‘solve’ or ‘fix’ misinformation - either online or offline. Consequently, approaching the problem holistically with a range of interventions is likely to work best. These interventions may cover ‘technical’, ‘reputational’ and ‘process-based’ approaches, and include:

- Automatic labelling of problematic third-party sources of information based on a list curated by instance admins/moderators. Reddit, for example, already does this with The Daily Mail, a UK news outlet.

- Opt-in blocklists to which users can subscribe. From a technical point of view, these may actually be ‘flaglists’ or ‘mutelists’, and save end users from some of the burden of manual curation.

- UX design that allows users to reveal information on-demand, rather than by default. For example, users may be able to see a source or post is contested without being presented with all of the details by default.
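
The labelling and on-demand disclosure ideas above could be combined along the following lines. This is only a minimal sketch in Python, not a description of how Bonfire works: the `SourceLabel` type, the `INSTANCE_LABELS` list, the `contested.example` domain, and the function names are all hypothetical. It looks up a link's domain in a list curated by instance moderators, then in any lists the user has opted into, and shows a short label by default with the detail available on demand.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class SourceLabel:
    summary: str  # short warning shown by default
    details: str  # fuller explanation, revealed only on demand


# Hypothetical list curated by instance admins/moderators.
INSTANCE_LABELS = {
    "contested.example": SourceLabel(
        summary="Contested source",
        details="Moderators have flagged this outlet as a persistent "
                "source of misinformation.",
    ),
}


def domain_of(url):
    """Return the lower-cased host of a URL without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def label_for(url, subscribed_lists=()):
    """Find a label for the URL, or return None.

    The instance list is consulted first, then any opt-in lists the
    user subscribes to. Only exact domain matches are considered.
    """
    domain = domain_of(url)
    for labels in (INSTANCE_LABELS, *subscribed_lists):
        if domain in labels:
            return labels[domain]
    return None


def render(url, label, expanded=False):
    """Show the short label by default; add details only when expanded."""
    if label is None:
        return url
    text = f"{url} [{label.summary}]"
    if expanded:
        text += f" ({label.details})"
    return text
```

Keeping the label separate from the rendering step means the same curated data can drive a warning, a mute, or a flag, depending on what each user has chosen.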

B) Ensure bot accounts are clearly identified

Users interact with bots differently than with accounts they know to have humans behind them. By ensuring that bot accounts are labelled as such, users are free to make informed decisions about the range of actions they have, such as unfollowing, muting, blocking, and reporting.
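
One way such labelling might work is sketched below. It assumes that bot status is declared in the account's ActivityPub actor document through the ActivityStreams `type` property (`Service` or `Application`), which is how some Fediverse software marks automated accounts. The function names and the `[bot]` marker are hypothetical. Self-declaration only identifies bots that are honest about being bots, so ‘sock puppet’ accounts would need other signals.

```python
# ActivityStreams 2.0 actor types that denote software rather than a person.
AUTOMATED_ACTOR_TYPES = {"Application", "Service"}


def is_bot(actor):
    """Return True if the actor document declares an automated actor type."""
    declared = actor.get("type", [])
    # "type" may be a single string or a list of strings.
    types = {declared} if isinstance(declared, str) else set(declared)
    return bool(types & AUTOMATED_ACTOR_TYPES)


def display_name(actor):
    """Name as shown in the interface, with a visible marker for bots."""
    name = actor.get("name") or actor.get("preferredUsername") or "unknown"
    return f"{name} [bot]" if is_bot(actor) else name
```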

C) Experiment with user-controlled machine learning

The notion of a ‘personal AI’ is a powerful one and could be based on a number of variables. For example, the personal AI could suggest:

- Source/outlet - third-party outlets that users may:

    - be interested in based on their network’s activity

    - not want to see links to in their feeds due to their network’s blocking/muting/flagging behaviour

- Keywords - words and hashtags that users may:

    - be interested in based on their network’s activity

    - wish to hide, either temporarily or permanently, based on their network’s behaviour

- Accounts - user accounts that users may:

    - wish to follow based on their network’s follows

    - wish to mute, rate-limit, or block based on their network’s blocking/muting/flagging behaviour
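
As an illustration of the last kind of suggestion, the sketch below uses a simple counting heuristic rather than machine learning: it suggests muting an account once a user-chosen share of the accounts they follow have muted, blocked, or flagged it. The function name, the `min_share` parameter, and the shape of the input data are all hypothetical, and the sketch assumes that people in the user's network have opted in to sharing this behaviour.

```python
from collections import Counter


def suggest_mutes(following, actions_by_account, already_muted=frozenset(),
                  min_share=0.5):
    """Suggest accounts to mute, based on what the user's network has done.

    following: set of account ids the user follows.
    actions_by_account: maps an account id to the set of accounts it has
        muted, blocked, or flagged.
    min_share: user-adjustable threshold; the fraction of followed
        accounts that must have acted before a suggestion is made.

    Returns (target, share) pairs, highest share first.
    """
    if not following:
        return []
    counts = Counter()
    for account in following:
        for target in actions_by_account.get(account, ()):
            counts[target] += 1
    suggestions = [
        (target, n / len(following))
        for target, n in counts.items()
        if n / len(following) >= min_share and target not in already_muted
    ]
    # Sort by share (descending), then by name for a stable order.
    return sorted(suggestions, key=lambda item: (-item[1], item[0]))
```

Because the rule and its threshold are visible and adjustable, users can see why a suggestion was made and tune or switch off the behaviour, in contrast to the closed algorithms described in the findings.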

This could be extended to other metadata available to the system, such as preferring some instances over others, interacting with user accounts or timelines differently at different times of the day, or visualising a user’s social graph.

D) Carry out more user research with under-represented groups

This initial user research was skewed towards white, male participants between the ages of 25 and 50, with two exceptions. In addition, we did not manage to talk with existing administrators or moderators of Fediverse instances, nor with anyone currently residing in Asia or Africa.

We therefore acknowledge and regret the limitations of the data obtained from this initial user research and suggest that further research and/or testing take place in the next few months. In particular, this should focus on people who do not identify as white or male, and on people who live in the Global South.

E) Carry out desk research to follow up promising leads

A number of websites, initiatives, and individuals were mentioned by user research participants. Two days of desk research would enable these to be collated and other, related projects mapped and contacted.

Next steps

After feedback on this report from the Bonfire team, the next steps are to:

- Create a Venn diagram showing overlap between technical, relational, and procedural approaches

- Brainstorm some potential interventions based on the Venn diagram

- Redraft report based on further research

References / thanks

We’d like to thank our individual user research participants: anubis2814, Benedict, Kevin, Mariha, Phevos, and Victor. In addition, our thanks to representatives from The New Design Congress, Niboe, Simply Secure, and WITNESS.